I agree we need expert opinions. But I imagine side-channel attacks, even if directed at extracting the private key, require knowing something about the code that is executing inside the TEE. That is the assumption I base this argument on, and if that is true then not knowing which canister is running within the TEE, or knowing it only for a short time, I imagine would make the side-channel attack harder to execute.

I think another interesting thing to consider is the distinction between types of collusion attacks. There seem to be two main types of attacks: halting a subnet (halt attack) and completely taking over a subnet (takeover attack).

A halt attack requires controlling greater than 1/3 of nodes in a subnet.

A takeover attack requires controlling 2/3 or greater of nodes in a subnet.

As far as I understand the IC, a halt attack will be much less destructive than a takeover attack. Halt attacks I believe would be easily detected, as the majority of the subnet would be able to identify the malicious nodes. Upon detection of the attack, the subnet I believe shuts itself down to protect further damage, until BFT guarantees are restored. I would imagine there is or will be some sort of slashing to penalize or remove the malicious nodes. A halt attack will cause down-time but will not be able to corrupt state (which I would posit is nearly a worst-case scenario).

A takeover attack would be horrendous. In this case, arbitrary state changes can be pushed through, and possibly without detection, since technically BFT has not been violated. It seems reasonable that in this case human beings would need to manually detect this kind of attack, and manual NNS proposals would need to be made to fix the subnet. But at that point it might be too late, data could have been manipulated, money lost, and perhaps even state deleted.

This is just what I imagine could happen based on what I know, those with deeper IC protocol knowledge please correct any false information.

With those explanations above, takeover attacks are clearly worse. What’s interesting is that the shuffling probabilities are much better in regards to takeover attacks. Someone would need to do the math (@rubenhorne @Manu) so we could compare.

But even with those numbers, I think the probabilities would still show that not shuffling gives us a better chance of an honest subnet configuration than shuffling many times would.

So for a static amount of corruption, shuffling hurts. But again, I think the adaptive adversary threat is probably more important. And if the takeover probabilities are acceptable, then shuffling might hurt less than we thought.

TLDR We should try to hide as much information about subnet membership and node and canister configurations as possible.

I don’t want to derail the conversation, but I want to put this here for future consideration once we feel comfortable with the attacks that can be mitigated by shuffling.

Privacy or hiding certain information from node operators could greatly enhance the security of the IC and improve the efficacy of node shuffling. I know some of what I’m about to describe may be extremely difficult to implement or maybe impossible with current technologies, but I think it’s something to strive for.

Basically, I’m trying to imagine the most secure IC possible (within reason). Here’s what it would look like:

Node operators know nothing about the canisters they host nor the subnets they are members of. All they know is that they are running the correct binary of the IC replica in their TEE.

The TEE provides attestations that the blessed replica binary is what is running in every node. Those attestations are included in consensus, so that if a sufficient number of nodes break the attestations then BFT is broken.

The IC unpredictability shuffles the members of subnets and assigns canisters to their initial subnets. Subnet configurations are hidden. Canisters do not even know which subnets they are members of.

I might have taken some of the hiding too far, but the security benefits of hiding certain information I believe are very clear. If a node operator does not know which canisters it hosts and does not even know how many other node operators are in their subnet and cannot know who the other node operators are…how exactly could node operators then collude? TEE would help prevent malicious replica binaries, and shuffling could help prevent any leaks in the hiding (node operators might still create and maintain offline relationships somehow) and side-channel attacks to the TEE.

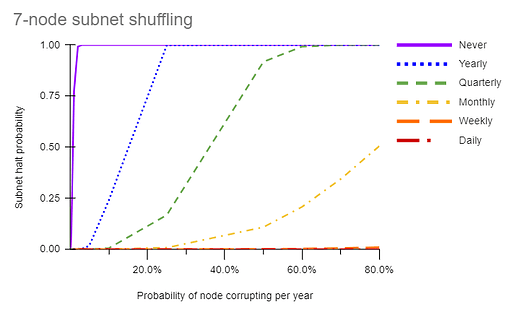

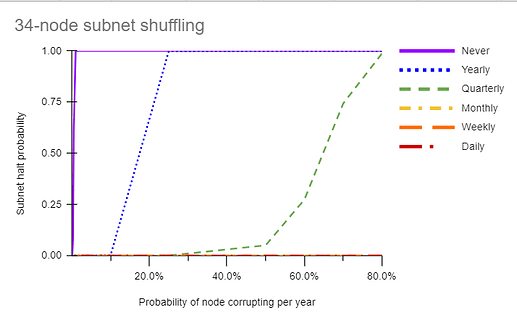

I have generated some charts (again, borrowing @Manu’s fault analysis sheet) that show how shuffling would protect against an adaptive (corruptible) adversary. I assume a constant “probability of a node turning corrupt in a year” from 0.1% to 80%. Then for a 100 year interval, I calculate the probability of a subnet to be halted (surpass Byzantine fault tolerance) for various shuffling intervals (never, yearly, quarterly, monthly, weekly, or daily). (In a separate chart not shown here but at the link, I plot how likely a subnet is to remain “takeover-free” (corruption<2/3) for 100 years for the same shuffling intervals). When reshuffling occurs, I assume that the corruption (in this case, collusion with fellow-subnet members) is reset to 0. Here is the link to the analysis:

What is interesting to me is that shuffling in this case is clearly protective. The more nodes that are on the subnet, the less likely it is for enough of them to corrupt each other to halt the subnet. What is alarming to me is how easily a low rate of corruption like 2%/year can eventually halt (and also takeover) a subnet when it is never shuffled, even for a 34-node subnet.

What seems to be the fundamental question then is which attack do we consider most risky/likely? Is it nodes influencing each other to for evil on a subnet (which we protect against by shuffling) or is it a distributed baseline of evil that we don’t want to randomly match together (for which we would protect by not shuffling or shuffling with blinding).

I think @lastmjs has a point about halts being much less harmful than takeovers. If shuffling protects against takeovers better while ceding ground in halts, I am ok with that.

One take-home message too is that in any case, more nodes are better, and 7 nodes in particular are quite vulnerable to this type of attack.

I hope to post more “takeover” data soon.

Did you mean to say “can eventually halt (and also takeover) a subnet”?

Yes I did. Should be fixed now

I agree. It seems one big takeaway from this discussion is that regardless of how you weight the risks of static vs adaptive node corruption, being able to conceal the identities of subnet members from each other would dramatically protect against both types of threats. Therefore, if feasible, implementing subnet membership concealment should take precedence over shuffling.

If it’s not feasible, then we need to figure out which, if any, shuffling frequency offers the best mix of protections against both static and dynamic threats with as little drawback as possible.

I think this is an important point. Changing the state arbitrarily or executing invalid transactions should indeed be impossible without corrupting 2/3 of the nodes (or 2f+1 to be precise), but I think we can already have bad attacks with only 1/3rd or > f corruptions. This is because we want to reach consensus without making any assumptions on the network. So unlike bitcoin, where things rely on everybody seeing the longest chain, we do not want to make such assumptions. This problem of asynchronous consensus can only be solved with f < n/3 corruptions (see eg wikipedia). That means that also in the internet computer, if you can corrupt f+1 parties and in combination with that you can do a network attack, then you can already “fork” the blockchain (two distinct chains could be finalized). This already allows double-spending attacks. So in summary, I think we should do all calculations aiming to keep f < n/3.

Can you explain this attack in more detail, or provide some external reading? I don’t understand how the finalization could occur here with only f+1 parties and a network attack, since I thought 2/3 (2f+1) are absolutely required to finalize anything. Would love to understand better, thanks.

Also, to add more to why I don’t understand the danger here, I thought that the Internet Computer had mechanisms in place to detect when 2f+1 parties are not able to come to agreement, and that the subnet would then “freeze” in this case, until the malicious nodes can be identified and removed. Is that not the case? How capable is the system at detecting when BFT guarantees are being broken, and what are the mitigations the protocol provides?

Of course! Let me start with a quick recap: So replicas in our consensus protocol create notarization shares to indicate valid blocks. They might create notarization shares for different blocks at one height. When they see a full notarization (which consists of 2f+1 notarization shares), they move on to the next round. If there exists only 1 notarization in a round, that means we have agreement (because chains must consist of fully notarized blocks at every height). To help identify this, notaries also create a finalization share on a block b at the end of the round if they did not create a notarization share on any block at that height other b. If a block b collects 2f+1 finalization shares, we consider it finalized, and replicas trust that this is the agreed-upon blockchain.

This is safe on a subnet of size n = 3f+1 when at most f nodes are corrupt, meaning that a finalization on block b means that no other notarized block b’ at that height can exist. That is because if we have 2f+1 finalization shares, that means those nodes say they did not notary-sign any other block at that height. f of those may be corrupt, so they might have lied, but it means at least f+1 nodes are honest and really did not create notarization shares for other blocks at that height. Since we only have 3f+1 replicas, and we know that f+1 did not create notarization shares for any other block b’ at that height, it only leaves 2f nodes that could have possibly created notarization shares on b’, but this is less than the threshold 2f+1, concluding the proof.

Now the attack: suppose the attacker controls more than f (say f+1) corrupt replicas, and additionally has full control over the network. For simplicity, let’s look at a 4 node subnet consisting of nodes A, B, C, and D, and the adversary controls 2 nodes (which is f+1, more than 1/3rd). The notarization/finalization threshold is 3 for a 4 node subnet. Let’s say A and B are the corrupt nodes. The attacker can make sure there are two valid blocks at height h, b1 and b2. It makes sure that replica C only receives block b1, and replica D only receives block b2 (using the fact that it fully controls the network). C will create a notarization share for b1, and D for b2. Using its control over replicas A and B, the attacker can complete both notarizations for b1 and b2, and show the full notarization on b1 to C and the full notarization on b2 to D. C will now create a finalization share on b1 (since it only created a notarization share on that block), and similarly D will create a finalization share on b2. Now again using A and B, the attacker can complete two finalizations on b1 and b2. This completes the attack: C thinks that block b1 is final, while D thinks b2 is final, and they are conflicting blocks.

So this shows that an attacker having more controlling more than f out of 3f+1 nodes is problematic. You cannot just sign arbitrary blocks, but we cannot guarantee agreement anymore.

As demonstrated by the attack above, if f+1 nodes are actively malicious and the adversary can control the network, then we immediately have a problem. If f+1 nodes are faulty in the sense that they are offline but not actively malicious, then the subnet would be stuck, but the NNS can replace nodes in the subnet.

Building on the theme of semi-random node shuffling, here another idea (somewhat tangential).

Some subnets could be designated as zero-carbon subnets, only using node providers running on renewable energy. Instead of using batteries to deal with supply intermittancy, node-handover is used instead. When renewable power is available at a specific node’s location, it joins the subnet; when not available, it goes dormant. This would decrease operating cost, but increase capital cost because the subnet would need more replication.

Electricity can’t be efficiently moved around the globe, but with the Internet Computer, compute can. In other words, we’d be using Chain Key to enable a subnet to “follow the sun”. A blockchain network that’s more environmentally friendly than the traditional cloud? Now that would turn heads.

Notes:

- I suppose this would require tweaks to the remuneration structure for node providers, so as not to overly penalise correctly-handled downtime.

- I guess this could be done with without any random shuffling, actually, so should this be a new topic instead?

This sounds so cool

Hello! I think that shuffling node membership makes network not more secure, but less secure, at some point of view.

Here is the explanation of my thesis.

If I’m the malicious node and I’m monitoring the state of my subnet (to gather sensitive data), then to gather all the data I need to have my node or node of my partners at every subnet. It’s quite hard to do and at the moment to achieve the goal of knowing the whole state I need to control/partner-with about 1/13 of the nodes. But when I’m going to be shuffled across the subnets, I just need to wait while the whole state will be gathered.

What do you think? Am I missing something?

What you @akup are describing is data privacy, and yes, the data would be less private with node shuffling (unless some sort of encryption were used to obscure the data from the node owner). However, the “security” of a subnet being discussed above was regarding how many nodes would need to gang up to manipulate or crash the data fidelity in their subnet, which is a harder feat.

I definitely understand this. Just thought that it should be mentioned by authority for the community ![]() Thanks!

Thanks!

Really interesting idea, although that would mean a subnet’s nodes are no longer geographically distributed across the world. That could hurt latency (like for a DFN) as well as hurt decentralization (e.g. at night a subnet could be fully running in a single geopolitical region of the world).

Maybe instead of completely zero-carbon subnets, we could tweak the node incentives or shuffling algorithm to prioritize renewable energy data centers (although I don’t know how you would prove that you’re using renewable energy…).

@MalcolmMurray thank you for raising this point, which should have greater prominence, maybe even a dedicated thread.

Looking into the environmental impact of blockchain txts and nfts, etc. led me to the IPFS (FileCoin) website where they describe their approach. Firstly, they publish all energy usage. Secondly, they trialed a scheme at the end of last year where “bakers” (their miners) are awarded certificates for purchasing energy from renewable sources. If successful, it looks like peeps publishing / storing on IPFS can choose to have their data hosted by these certificated bakers (not a hundred percent sure I have the terms right, but am sure you get what I mean).

I’m very interested to understand the environmental impact of different dapps and activities on the IC from a climate impact perspective.

Personally, transparent reporting of energy consumption just feels like a no-brainer starting point.

Does this fall into the realm of a community proposal to the NNS?

Thanks @NickM

I started a thread a while ago, which didn’t gain much traction: Zero-carbon subnets

Yes, I’d love to see an NNS proposal for the community to voice sustainability as a priority and a potential competitive advantage for the Internet Computer.

Thanks @MalcolmMurray, I have added to that thread ![]()