Some History

Yral, as the name suggests, has been designed and architected to “go viral”. We have always endeavoured to build an app that can scale and absorb traffic from millions of users. However, every time we’ve started driving traffic, we’ve been bottlenecked by constraints and limits imposed by the IC network.

We started with a single-canister architecture for the very first version of our app, which was written in Motoko in late 2021 to early 2022, back when we were called GoBazzinga. It started receiving some initial traffic, but around the 10,000-active-user mark we noticed significant bottlenecks and slowdowns, which made us realize that a single canister (which is single-threaded) can only scale so far.

So, around late 2022, when we launched a new game and rebranded to Hot or Not, we rewrote our entire backend as a multi-canister system in which we spun up an individual canister for every user who signed up. This ensured that every user had all the compute, storage, and bandwidth they needed for anything we could possibly imagine them doing.

We were acutely aware of the limitations of a single subnet. However, in multiple conversations with the DFINITY team at the time, we were told that subnet splitting was actively being worked on and would be available soon. Subnet splitting allows a subnet to split in two, dividing its canisters into two groups that each end up on a different subnet. We were hoping this would solve our scaling challenges: every time one of our subnets became full, we would simply split it in two and continue scaling.

In the meantime, the Hot or Not game was a hit and we quickly gained traction as the subnet started filling up. At its peak, we had 300k active users, 50k of whom had signed in with their own canisters. At that point, we needed to spill over to more subnets to scale further. However, subnet splitting was still not available, and we had to shut down sign-ups to the game because we were running out of canisters to spin up for new users.

This was devastating for us: we had to SHUT DOWN sign-ups to our app for MONTHS, all while we were trying to complete our SNS sale. That was a whole other can of worms. You think testing for SNS is difficult now? Imagine what it was like for us, as the 2nd ecosystem app on the IC, doing the SNS sale independently.

So we empathise with @jamesbeadle when we see instances like this; we can sense their clear frustration with what that entire process looks like.

Where we currently are

However, after a lot of sleepless nights and loads of effort, we managed to complete our SNS sale. Shout out to all of our investors and all the supportive friends who believed in us up to this stage.

At this stage, we had a successful SNS sale behind us and a treasury with which to fund our subsequent growth.

This time, we truly wanted to build a backend that could scale to millions of signed-in users without having to rely on DFINITY shipping features like subnet splitting in order to scale.

So, we started building our own multi-subnet, multi-canister, dynamically load-balancing backend. It required us to keep our heads down and build. During this time, we faced a lot of criticism because we were not shipping any user-facing features. We were also walking a path no one had walked before, so there was real uncertainty about whether we would be able to pull it off.

However, early this year, we shipped our new backend, which can dynamically load balance across multiple subnets and multiple canisters. We were itching to put it through its paces and stress the entire network.
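To illustrate why a canister-per-user design stalls on a single subnet but keeps going once canisters can be spread across several, here is a toy Python model. It is a hypothetical sketch, not Yral's production backend: `Subnet`, `place_user_canister`, `sign_up`, the per-subnet `capacity`, and the least-loaded placement policy are all assumptions chosen for illustration, and real canister creation on the IC (management canister calls, cycles funding) is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Subnet:
    """Hypothetical subnet with a fixed number of canister slots."""
    name: str
    capacity: int
    canisters: list[str] = field(default_factory=list)

    @property
    def load(self) -> float:
        # Fraction of this subnet's canister slots already in use.
        return len(self.canisters) / self.capacity

    def has_room(self) -> bool:
        return len(self.canisters) < self.capacity


class SignupsClosed(Exception):
    """Raised when no subnet can host another per-user canister."""


def place_user_canister(user_id: str, subnets: list[Subnet]) -> Subnet:
    """Create one canister for a new user on the least-loaded subnet with room."""
    candidates = [s for s in subnets if s.has_room()]
    if not candidates:
        raise SignupsClosed(f"no subnet can host a canister for {user_id}")
    # min() returns the first subnet on ties, so equal loads go to the earliest listed.
    target = min(candidates, key=lambda s: s.load)
    target.canisters.append(f"canister-for-{user_id}")
    return target


def sign_up(users: list[str], subnets: list[Subnet]) -> list:
    """Return the subnet name chosen for each user, or None if sign-up was refused."""
    placed = []
    for user in users:
        try:
            placed.append(place_user_canister(user, subnets).name)
        except SignupsClosed:
            placed.append(None)
    return placed


users = [f"user{i}" for i in range(5)]
print(sign_up(users, [Subnet("subnet-a", capacity=3)]))
print(sign_up(users, [Subnet("subnet-a", 3), Subnet("subnet-b", 3)]))
```

With one subnet of three slots, the fourth and fifth sign-ups are refused; with two subnets, all five users get a canister, alternating between subnets because ties in load go to the first subnet listed.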

In the meantime, we shipped a bunch of user-facing features, like:

- a completely new authentication mechanism that lets users sign up in seconds instead of the tens of seconds Internet Identity (II) takes

- a frontend written in Rust and WASM for the fastest experience that browsers can offer

- an AI-powered video recommendation feed that recommends videos to users based on their preferences

Subsequently, we looked around the market and noticed that memecoins were the flavour of the season. With our new systems in place, we could ship a memecoin creation platform in weeks that could rival and beat the best of the best, and do it ALL ON CHAIN with support for ALL the open ICRC standards.

As we’ve started to scale the meme token platform, we’ve enabled every user to spin up their own tokens WITHOUT even needing to SIGN UP or PAY A SINGLE DOLLAR IN CYCLE COSTS. And we do it ALL ON CHAIN.

However, as we scale and massively drive traffic to every subnet on the IC, almost every other app on the IC has started to hit bottlenecks.

Here’s @Manu from DFINITY acknowledging that most of the load comes from Yral’s presence on all the subnets. He has since retracted his original statement, but because of the way Discourse works, you can still see it in this reply.

Here are links to a couple of threads that raise the issue of instability and bottlenecks arising from the traffic we started driving to all the subnets on the IC:

- Subnets with heavy compute load: what can you do now & next steps

- https://forum.dfinity.org/t/subnet-lhg73-is-stalled/35174

- Stability issue

- Ingress_expiry not within expected range error

- Response verification failed: Invalid tree root hash

You can find many more, all starting about 20 days ago, when we kicked off our first airdrop, as documented here:

This entire effort also required a significant amount of cycles to fund. Here is one such screenshot of us converting to cycles to fund our canisters.

We did this 4-5 times in the last 2-3 weeks. We’ve spent close to $200,000 in cycle costs over the last 2 months, owing to how regressive cycle reservation is and how inefficient the current infrastructure is.

What are we asking for

As I was writing this, I held back from posting the original draft because a lot of frustration was surfacing from the REPEATED ROADBLOCKS we run into every time we START SCALING, DRIVE TRAFFIC, GAIN MOMENTUM, and ONBOARD USERS, only to have that momentum KILLED by network constraints and bottlenecks outside our control.

This time as well, we’ve noticed that the short-term discussion seems to be heading towards raising costs to the point of artificially constraining high-growth apps.

There has recently been a change to how subnets charge canisters, called the “cycle reservation mechanism”, which essentially increases the cost of cycles on a subnet exponentially as the subnet fills up. In effect, it is a mechanism that artificially constrains the growth of apps on a subnet.

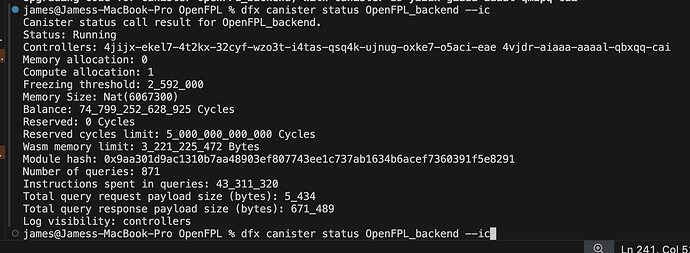

We’re significantly affected by this, as on some of the subnets we’re on, canisters have started to reserve 2-5 trillion cycles each. Imagine the possible cost for a fleet that currently numbers more than 200,000 canisters and intends to grow beyond 1 million canisters.
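For a rough sense of scale, the sketch below multiplies the per-canister reservation quoted above (2-5 trillion cycles) by the fleet sizes mentioned (200,000 today, 1 million as a target). It assumes, as a simplification, that every canister reserves the same amount, and converts at the IC’s fixed rate of 1 trillion cycles per XDR; `fleet_reservation` is a hypothetical helper for this back-of-envelope estimate only.

```python
TRILLION = 10**12
CYCLES_PER_XDR = TRILLION  # the IC pegs 1 trillion cycles to 1 XDR


def fleet_reservation(per_canister_cycles: int, canisters: int) -> int:
    """Total cycles set aside if every canister reserves the same amount (simplification)."""
    return per_canister_cycles * canisters


for canisters in (200_000, 1_000_000):
    for per_canister_t in (2, 5):
        total = fleet_reservation(per_canister_t * TRILLION, canisters)
        print(f"{canisters:,} canisters x {per_canister_t}T cycles each = "
              f"{total // TRILLION:,}T cycles (about {total // CYCLES_PER_XDR:,} XDR)")
```

Under these assumptions, 200,000 canisters would tie up 400,000 to 1,000,000 XDR worth of cycles in reservations, and a fleet of 1 million canisters would tie up 2 to 5 million XDR.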

We’re not asking for a free ride. We’re asking for a fair shot at being able to scale and grow our app without being artificially constrained by the network.

IF the IC intends to be a world computer, it needs to be able to handle growth of a measly tens of thousands of canisters without breaking a sweat. We’re not even talking about millions of canisters yet.

We’re asking for a focus on drastically improving the protocol by adding mechanisms for:

- easy migrations

- load detection

- dynamic load balancing

Using exponentially growing cycle costs on subnets to stop apps from growing, citing an aversion to spiky traffic, is absolutely the wrong way to go. The entire internet runs on spiky traffic, from Black Friday sales to Taylor Swift concert ticket sales.

If the IC wants to grow, it needs to support the apps that are trying to scale and bring new users onboard instead of artificially trying to constrain their growth.

We will, however, continue building and breaking new ground, no matter what it takes.

Thank you for patiently reading through this.

– Just a frustrated developer trying to scale on the IC