I am coming to this problem a few times and it seems there is no way around it but to figure it out.

Goal

Have something like SQL

SELECT * FROM something WHERE skill > 20 and fireballs < 10 ORDER BY name

Or something like the MongoDB syntax which seems to be widely adopted too.

const queryResults = await myDatabase.heroes // 'heroes' should be the collection name

.find()

.where({

'skills.name': 'fireball', // Searching within the skills array for a skill with the name 'fireball'

'birthyear': {

$gte: 1940, // Born on or after 1940

$lte: 1950 // Born on or before 1950

}

})

.sort({name: 1})

.limit(5)

.exec(); // Execute the query

Problems

When creating a library that tries to make the above examples work: One of the biggest obstacles is the inability of Motoko to have functions that accept any type and work on data structures without knowing what they are. In JS you can do obj.properyname or obj["propertyname"] and address something. In Motoko, if implemented it would work similarly to how arrays work. A function doesn’t have to specify exactly how many members an array needs to have. If someone tries to access a non-existent member arr[23234] an exception is thrown. Is the Motoko team planning on adding such property addressing?

The workaround is to use Candy library. But then if you use that or the ‘untyped addressing’, we are losing the benefits coming from the type system.

The Candy Utils can now do things like

let candy4 = get(candy, path("books[author.name == $.mostPopularAuthor].pageNumber")));

let candy5 = get(candy, path("books[author.name == $.mostPopularAuthor | pageNumber < @.numberOfPurchases].pageNumber"));

which will definitely save time. Making that or SQL syntax or MongoDB syntax work with native Motoko structures without Candy (not sure how to call them) is impossible.

That leaves us with one more option, to create some kind of interface that gets it done without circumventing the type system. I will try to do that with the query above.

const queryResults = heroes.find()

.where([

#func(func(x) { x.skills.name == 'fireball' }),

so far so good. We have converted 'skills.name': 'fireball' to something that is alright.

#func(func(x) { x.birthyear < 1940 and x.birthyear > 1950 }),

this will work if we are doing full table scans. (scanning the whole memory without using indexing).

But we do need indexing, this means something like that should be the syntax

#index("birthyear", [#gt(1940), #lt(1950)])

now how will the sorting work, it also should allow indexed sorting or non indexed sorting

#sort_index("name", #asc)

or

#sort_func(func(a,b) { a.created > b.created })

and we end with

#limit(5)

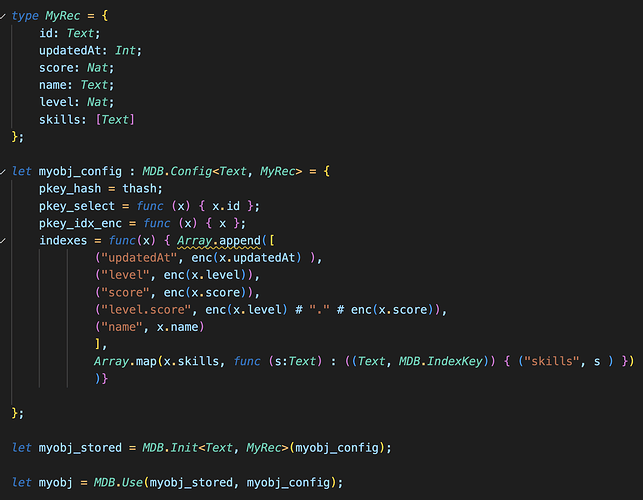

How will the initialization look like:

type MyType = {

name : Text;

birthyear: Nat;

skills:[{name: Text}]

}

stable var heroes = MDB<MyType>(

{

//... other config options

indexes: [

("name", func(x) { x.name }),

("birthyear", func(x) { lexicographic.encode(x.birthyear) })

]

}

I think the last approach is my personal favorite. It gets a bit getting used to, but it is kind of intuitive - because you can’t do it in another way (that I can think of). What do you think?

We can set a bounty for this once we nail the interface designs and some requirements. It’s already possible to do with @ZhenyaUsenko Map and Set and @icme 's BTree